Keep your META where your DATA is. In the filesystem?

(p.bubestinger@ArkThis.com) November 2023

What’s this all about?

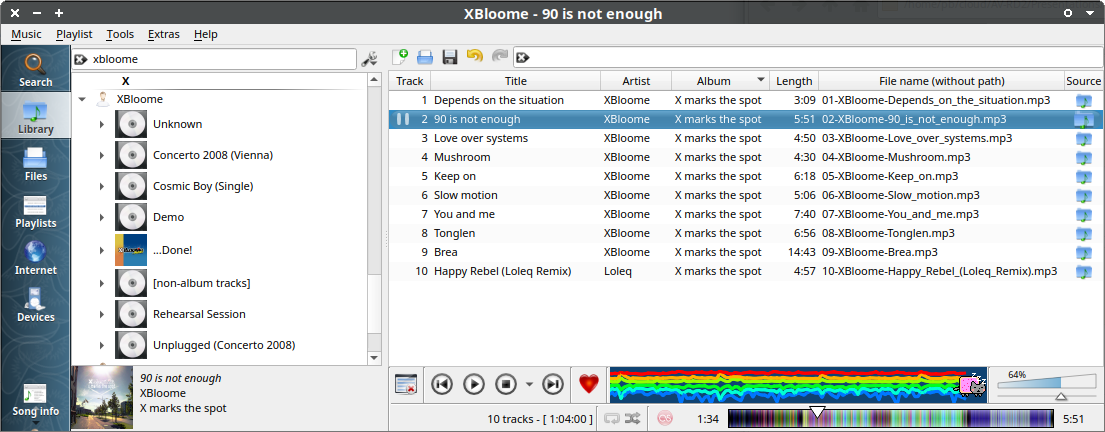

Metadata- and File-Wrangling.

Professional and Private.

My “AHA” Effect…

What’s with the “Holodeck”?

Yes, I’m trying to be funny.

Yes, I’m very serious about this idea.

My Perception:

Current Project Desires:

“Make things all digital and awesome! And easy. In no-time.”

My Perception:

Disclaimer

And I apologize: I may be an ad for FOSS.

Existing Components

Where to begin?

Terms

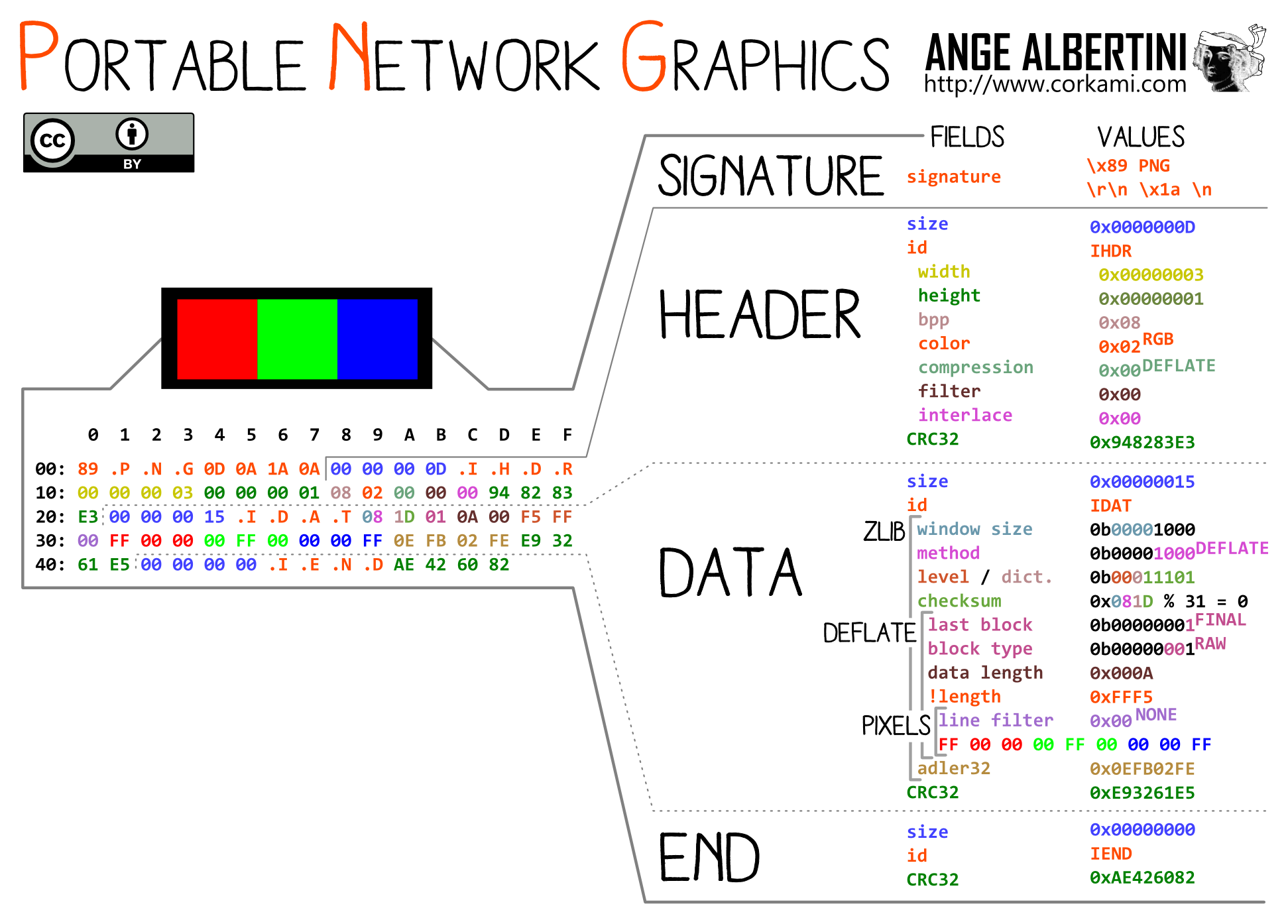

- Files/Folders?

- Metadata?

- Data “payload”?

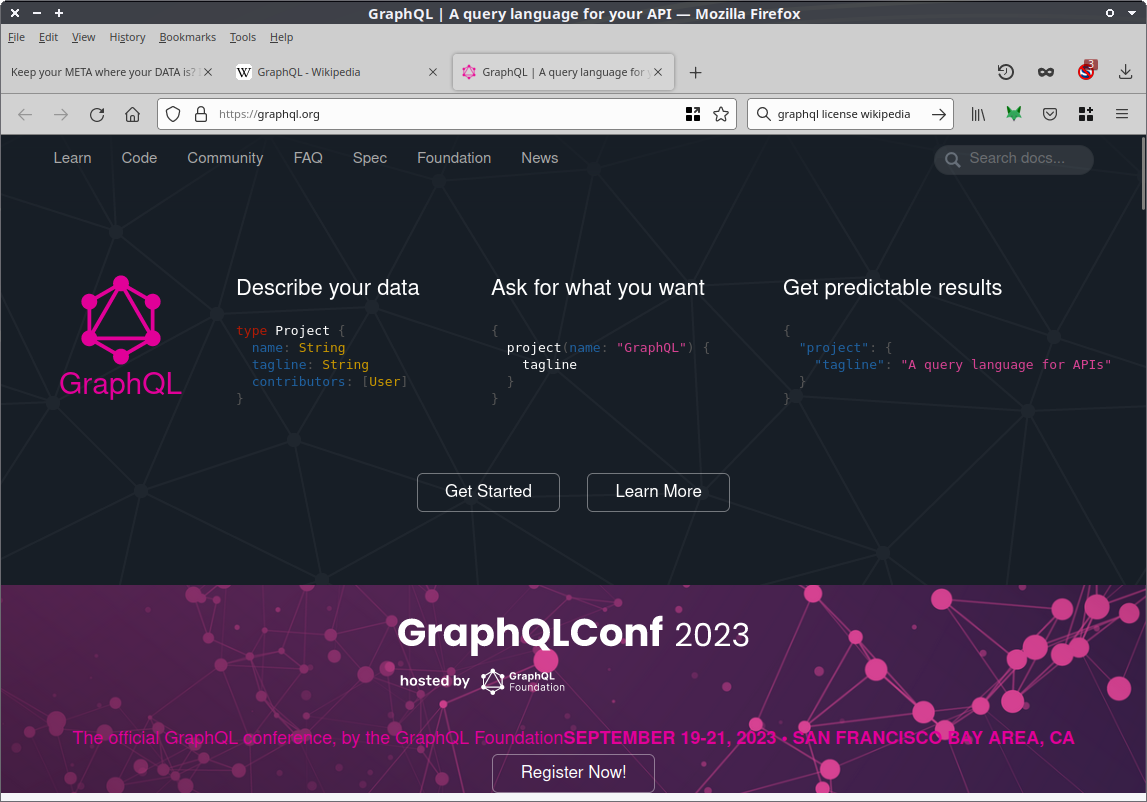

- Data Objects?

- Filesystem?

- All clear?

Status quo: Assigning “a plain title”?

See: “Keywords and Text Strings” (PNG Specification, W3.org)

And: “What software can I use to read png metadata?”
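The PNG specification cited above stores plain textual metadata in `tEXt` chunks: a keyword, a single null byte, then Latin-1 text. A minimal sketch of reading them back with only the Python standard library could look like this. The function name `read_png_text_chunks` is hypothetical, compressed `zTXt` and international `iTXt` chunks are ignored, and CRCs are not verified.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def read_png_text_chunks(data: bytes) -> list[tuple[str, str]]:
    """Return (keyword, text) pairs for every tEXt chunk in PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts = []
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            # Keyword and text are separated by exactly one null byte.
            keyword, _, text = body.partition(b"\x00")
            texts.append((keyword.decode("latin-1"), text.decode("latin-1")))
        if ctype == b"IEND":
            break
        pos += 12 + length  # skip length, type, data and CRC
    return texts
```

A list of pairs is returned rather than a dict because the specification allows the same keyword to appear more than once.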

The Unix Philosophy

“Everything is a file.” See: https://en.wikipedia.org/wiki/Unix_philosophy

If we translate files to Objects, it becomes…?

The AHA-Holodeck Philosophy

“Everything is an Object.”

What if you could simply…?

What if…?

What if…?

Metadata-only Objects = Catalog entries
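One way to picture “everything is an Object” is a data structure in which metadata and payload always travel together, and an object without a payload simply *is* a catalog entry. The following is a hypothetical sketch for illustration only: the class `DataObject` and its fields are assumptions, not code from the AHA_ObjectWorld project.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class DataObject:
    """Hypothetical object: metadata plus an optional data payload."""
    metadata: dict[str, str] = field(default_factory=dict)
    payload: Optional[bytes] = None  # None means: no data attached (yet)

    @property
    def is_catalog_entry(self) -> bool:
        # An object that carries only metadata acts as a catalog entry.
        return self.payload is None
```

A catalog record can thus exist before its digitized file does, and becomes a “full” object once a payload is attached, without moving its metadata anywhere else.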

Interoperability of Features

Embedded metadata?

What is the use-case for embedding any metadata?

So the meta stays with the data!
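For PNG, embedding is the reverse of reading a `tEXt` chunk. A minimal sketch, assuming an uncompressed Latin-1 `tEXt` chunk is sufficient (no `zTXt`/`iTXt`), with hypothetical helper names `make_chunk` and `add_png_text`. The new chunk is inserted just before `IEND`, which the PNG specification permits for `tEXt`.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def make_chunk(ctype: bytes, body: bytes) -> bytes:
    """Build a PNG chunk: length, type, data, CRC-32 over type + data."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)

def add_png_text(data: bytes, keyword: str, text: str) -> bytes:
    """Return a copy of the PNG bytes with a tEXt chunk inserted before IEND."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    kw = keyword.encode("latin-1")
    # The specification limits keywords to 1-79 bytes, without null bytes.
    if not 1 <= len(kw) <= 79 or b"\x00" in kw:
        raise ValueError("keyword must be 1-79 Latin-1 bytes without null")
    chunk = make_chunk(b"tEXt", kw + b"\x00" + text.encode("latin-1"))
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        if ctype == b"IEND":
            return data[:pos] + chunk + data[pos:]
        pos += 12 + length  # skip length, type, data and CRC
    raise ValueError("no IEND chunk found")
```

In use, one would read a file's bytes, pass them through `add_png_text(data, "Title", "Holodeck")` and write the result to a new file, so the original stays untouched while the title now travels inside the image itself.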

Media container formats?

What is the use-case for (media) container formats?

So related data stays together!

Performance?!

Where to begin (implementing this)?

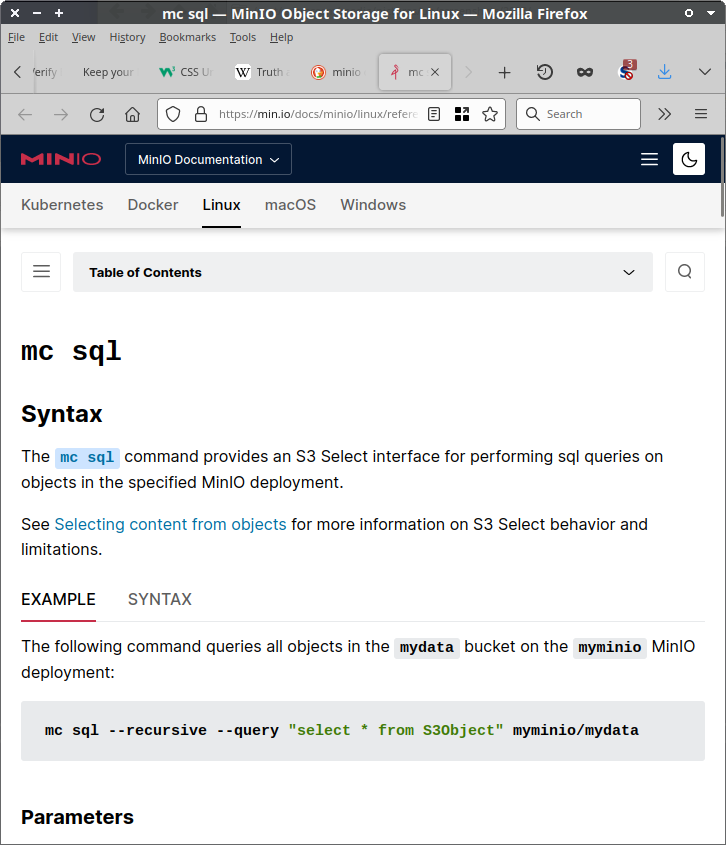

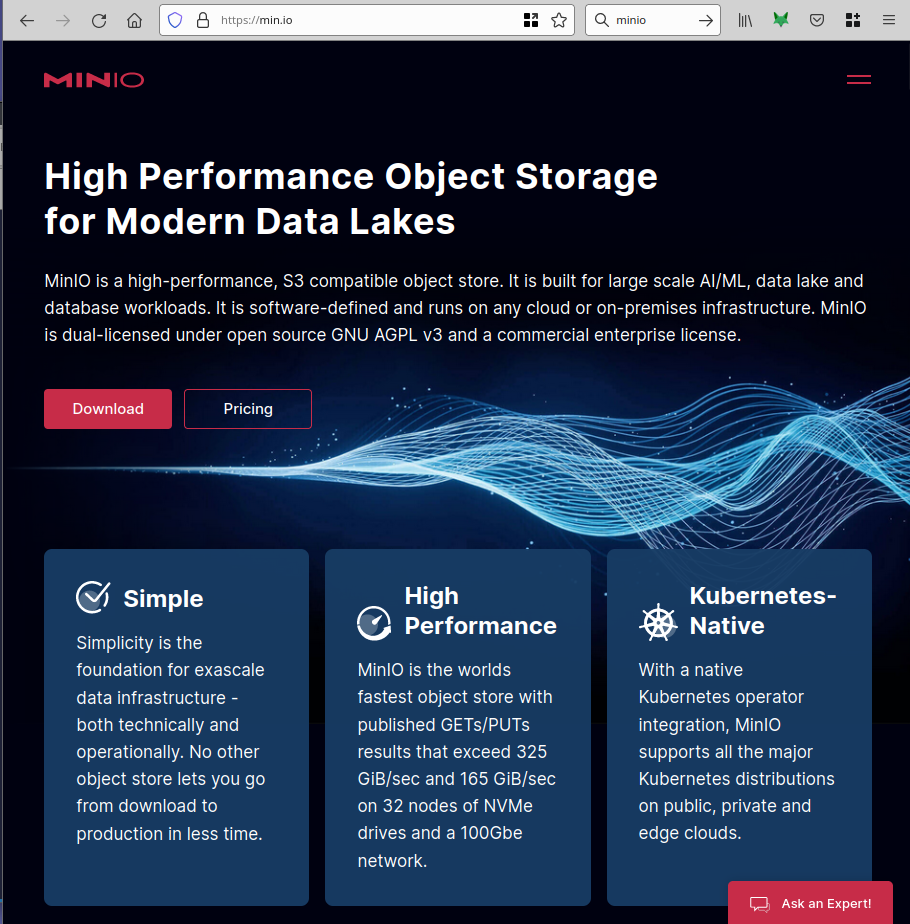

- MinIO?

- NoSQL / MongoDB?

- Search Indexers?

- …More ideas?

What to feed it with?

- Real-world collection (web-)access copies.

- Corresponding data (catalog) entries (XML, JSON, CSV, etc.).

- …More ideas?
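Feeding a prototype with both could start as simply as pairing catalog rows with access copies by filename. A minimal sketch, assuming a CSV export with a hypothetical `filename` column. The function name `pair_catalog` and the dict layout are illustrative assumptions; rows without a matching file stay metadata-only, i.e. catalog entries.

```python
import csv
import io
from pathlib import Path

def pair_catalog(csv_text: str, access_copies: list[Path]) -> list[dict]:
    """Turn catalog rows into objects, attaching an access copy where one matches.

    Assumes a hypothetical 'filename' column naming each access copy.
    """
    by_name = {p.name: p for p in access_copies}
    objects = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Missing or empty filename -> no payload -> metadata-only object.
        payload = by_name.get(row.get("filename") or "")
        objects.append({"metadata": row, "payload": payload})
    return objects
```

With real data this might be called as `pair_catalog(Path("catalog.csv").read_text(encoding="utf-8"), list(Path("access_copies").iterdir()))`, where both paths are placeholders.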

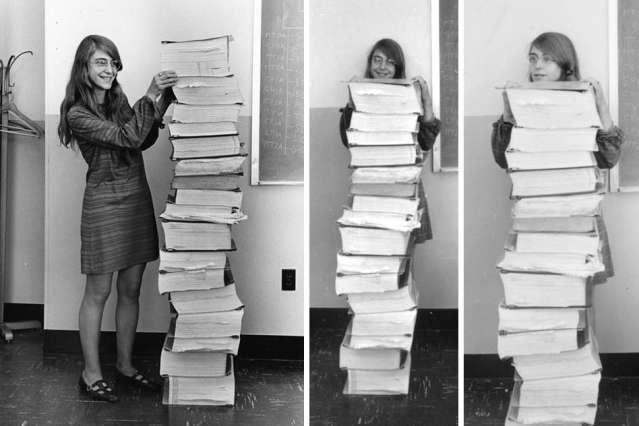

The rabbit hole goes deeper.

But that’s a story for another time…

If such an implementation/prototype is not awesome…

…then it’s not what I suggest here 😎️

(Or simply not finished yet)

Oh, btw:

Ideas? Input? Questions?

Please! Go ahead. Now and later :)

Peter Bubestinger-Steindl

Peter@ArkThis.com

https://github.com/ArkThis/AHA_ObjectWorld/

https://diode.av-rd.com/nextcloud/index.php/s/z2M4JZY8RFt8Nnd

CC-BY-SA